This page is more technical

This page is a deeper dive into the performance of the Flying Navigation System and is therefore fairly technical.

However, understanding this page is not required for the functionality of the plugin.

Preamble

These numbers were generated from the FlyingNavBenchmark project, available on GitHub.

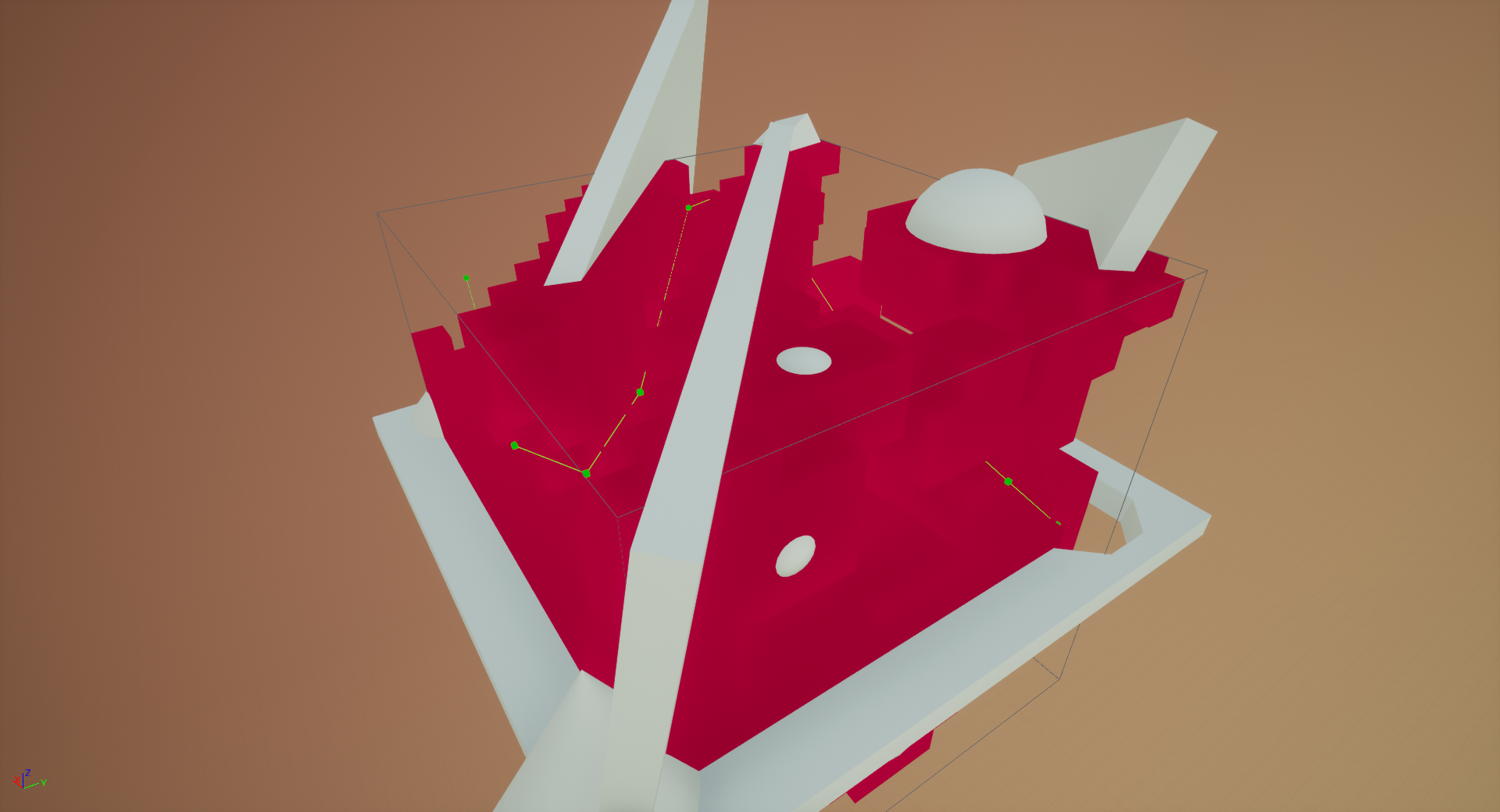

Path with Octree Visualisation

All algorithms are CPU based, and were run on an Intel Core i7 4930K @ 3.9GHz in Windows

10. The project was packaged up in a Shipping build, so this should be running optimised

code.

The octree generation is multithreaded; the pathfinding and raycasting are

singlethreaded.

The tables are designed to illustrate the impact of the various optimisation options for

pathfinding.

Here we test the HeuristicScale, Use Unit Cost and Use

Node Compensation options, testing for A*, Lazy Theta* and Theta* algorithms.

Details of these can be found in the Usage

section.

I have also tested the octree raycast algorithm, and compared it to the standard Physics Line Trace.

I have used Time (in milliseconds), Number of Iterations and

Distance Proportion to measure performance and accuracy. The pathfinding

algorithm explores one node per iteration.

The iterations for Theta* and Lazy Theta* take longer than A* due to the line-of-sight check.

The distance proportion is a measure of how accurate the path is; these optimisation

options take shortcuts and therefore aren't guaranteed to find the shortest path.

The benchmark will compare the optimal path (Theta* with no optimisation) to the found

path, calculating the value with the formula:

int Distance = (FoundPathLength / OptimalPathLength) * 100%

It should be noted that the time column is not a useful measure for comparisons between

tables, as I could not get consistent results between runs.

I used FPlatformTime::GetSeconds() for benchmarking the runtime, which

calls

QueryPerformanceCounter() on Windows (which is apparently accurate), so I

don't know why the time fluctuates as it does.

You will notice this by the fact that fewer iterations does not necessarily mean smaller

runtime between tables, and theoretically the generation time should be identical.

Therefore, I will be using the Iterations counter to compare performance, and the time

column should only be used as an estimate.

Pathfinding Benchmarks

Remarks

The test scene was designed to be a worst-case scenario for A* style algorithms,

in which most of the search space would need to be explored to complete the path.

It should be noted that even on the lowest resolution, a perfectly acceptable path can be found for most flying agents,

so it really isn't necessary to crank up the number of layers as much as one might think.

With only bUseUnitCost = true, we see almost a halving in iterations across the board (around 1.6-1.7x better) with a consistent 10% increase in path distance for the any-angle algorithms.

It's 20-40% for A*, but I believe this is because the extra steps in the path from the optimisation are not smoothed out by the line-of-sight check.

For the 4 layers case, this is pretty much the end of the story. No other optimisations improved on that score.

As the number of layers increase, the other optimisations come into play.

With bUseNodeCompensation = true, we get a similar improvement (1.7x) in the 9 layers case, but no improvement with 4 layers.

However, when using both options, we get a comfortable one third of the original number of iterations for 9 layers.

The middle layers fall proportionally between these examples.

Interestingly, the heuristic scale does not make much of a difference or even hinder the performance when both of these options are turned on,

but that could be due to the nature of the test scene.

The heuristic is not very useful for most of the path journey, but in real scenes in fairly open spaces the heuristic would come into its own.

Complicated mazes such as this don't have as much of a benefit from increasing the heuristic from 1.0.

As you can see, increasing the number of layers drastically increases the pathfinding and generation time.

You can get away with this by using asynchronous pathfinding, but this is not supported with behaviour trees at the moment.

Check out the Usage page for more information.

However, asynchronous processing is still using resources, so a larger max detail size is a better solution.

No Optimisations

HeuristicScale = 1.0bUseUnitCost = falsebUseNodeCompensation = false

# Layers

|

# Voxels

|

Generation

Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

2.60 |

0.91 |

1,513 |

128% |

1.75 |

1,516 |

102% |

2.38 |

1,511 |

100% |

| 5 |

32,768 |

8.10 |

9.28 |

13,203 |

121% |

19.36 |

13,280 |

101% |

32.54 |

13,272 |

100% |

| 6 |

262,144 |

49.41 |

44.85 |

58,446 |

116% |

109.21 |

58,433 |

100% |

193.51 |

58,423 |

100% |

| 7 |

2,097,152 |

262.82 |

171.30 |

205,002 |

113% |

450.36 |

204,842 |

100% |

847.54 |

204,925 |

100% |

| 8 |

16,777,216 |

1102.86 |

834.48 |

901,969 |

112% |

2810.56 |

901,100 |

100% |

5557.71 |

902,426 |

100% |

| 9 |

134,217,728 |

4369.69 |

4457.57 |

4,324,535 |

110% |

21444.74 |

4,320,052 |

100% |

47212.68 |

4,320,212 |

100% |

Unit Cost Nodes

HeuristicScale = 1.0bUseUnitCost = truebUseNodeCompensation = false

| # Layers |

# Voxels |

Generation Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.61 |

1.58 |

911 |

151% |

1.99 |

910 |

112% |

2.46 |

910 |

111% |

| 5 |

32,768 |

12.58 |

4.69 |

7,264 |

149% |

9.87 |

7,232 |

109% |

17.11 |

7,233 |

107% |

| 6 |

262,144 |

56.29 |

18.29 |

33,058 |

157% |

51.83 |

32,631 |

110% |

101.95 |

32,637 |

110% |

| 7 |

2,097,152 |

265.47 |

67.54 |

117,504 |

144% |

233.23 |

116,125 |

110% |

484 |

116,170 |

111% |

| 8 |

16,777,216 |

1067.37 |

262.17 |

518,954 |

150% |

1149.60 |

511,784 |

110% |

2414.94 |

511,715 |

110% |

| 9 |

134,217,728 |

4286.96 |

1502.63 |

2,491,289 |

149% |

9890.55 |

2,452,118 |

108% |

24578.45 |

2,452,855 |

109% |

Node Size Compensation

HeuristicScale = 1.0bUseUnitCost = falsebUseNodeCompensation = true

| # Layers |

# Voxels |

Generation Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.45 |

2.09 |

1,513 |

128% |

2.93 |

1,516 |

102% |

3.53 |

1,511 |

100% |

| 5 |

32,768 |

11.52 |

10.47 |

13,225 |

121% |

20.70 |

13,280 |

101% |

33.54 |

13,273 |

100% |

| 6 |

262,144 |

57.99 |

44.55 |

56,673 |

130% |

105.42 |

57,065 |

108% |

188.83 |

56,907 |

107% |

| 7 |

2,097,152 |

261.01 |

159.37 |

184,989 |

129% |

384.13 |

174,653 |

117% |

714.12 |

170,859 |

115% |

| 8 |

16,777,216 |

1099.90 |

500.27 |

660,749 |

162% |

1332.56 |

563,422 |

121% |

2598.52 |

616,339 |

125% |

| 9 |

134,217,728 |

4275.72 |

2877.39 |

2,542,330 |

146% |

12377.01 |

2,595,133 |

124% |

26376.67 |

2,616,811 |

124% |

Unit Cost Nodes and Node Size Compensation

HeuristicScale = 1.0bUseUnitCost = truebUseNodeCompensation = true

| # Layers |

# Voxels |

Generation Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.49 |

1.61 |

911 |

151% |

2.00 |

910 |

112% |

2.47 |

910 |

111% |

| 5 |

32,768 |

11.64 |

4.77 |

7,257 |

153% |

9.97 |

7,228 |

107% |

17.28 |

7,229 |

106% |

| 6 |

262,144 |

53.11 |

19.00 |

33,000 |

176% |

50.07 |

32,640 |

110% |

96.89 |

32,647 |

110% |

| 7 |

2,097,152 |

272.20 |

64.68 |

103,017 |

170% |

185.21 |

103,698 |

121% |

354.72 |

103,717 |

121% |

| 8 |

16,777,216 |

1267.24 |

271.63 |

360,523 |

184% |

727.31 |

365,728 |

127% |

1336.97 |

365,720 |

127% |

| 9 |

134,217,728 |

4266.87 |

1057.10 |

1,417,913 |

182% |

2794.43 |

1,426,194 |

128% |

6686.54 |

1,426,159 |

128% |

5x Heuristic Weighting

HeuristicScale = 5.0bUseUnitCost = falsebUseNodeCompensation = false

| # Layers |

# Voxels |

Generation Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.83 |

1.90 |

1,108 |

135% |

2.34 |

1,022 |

102% |

2.80 |

1,015 |

101% |

| 5 |

32,768 |

11.89 |

6.81 |

7,949 |

132% |

12.21 |

7,806 |

105% |

19.49 |

7,816 |

104% |

| 6 |

262,144 |

53.04 |

27.24 |

34,801 |

128% |

61.31 |

34,686 |

105% |

110.82 |

34,804 |

105% |

| 7 |

2,097,152 |

259.69 |

100.97 |

124,194 |

124% |

251.58 |

124,056 |

105% |

481.21 |

124,030 |

104% |

| 8 |

16,777,216 |

1064.77 |

368.79 |

542,751 |

123% |

1179.15 |

542,808 |

104% |

2324.22 |

542,652 |

104% |

| 9 |

134,217,728 |

4358.30 |

2027.02 |

2,578,450 |

123% |

8731.57 |

2,580,471 |

104% |

23427.42 |

2,579,942 |

104% |

10x Heuristic Weighting

HeuristicScale = 10.0bUseUnitCost = falsebUseNodeCompensation = false

| # Layers |

# Voxels |

Generation Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.53 |

1.80 |

975 |

135% |

2.26 |

956 |

107% |

2.71 |

950 |

107% |

| 5 |

32,768 |

11.83 |

6.48 |

7,500 |

137% |

11.75 |

7,487 |

105% |

18.46 |

7,472 |

105% |

| 6 |

262,144 |

54.94 |

26.42 |

33,543 |

132% |

58.88 |

33,605 |

104% |

105.72 |

33,633 |

104% |

| 7 |

2,097,152 |

266.61 |

97.34 |

119,126 |

126% |

257.39 |

120,044 |

105% |

473.18 |

119,945 |

105% |

| 8 |

16,777,216 |

1062.95 |

361.79 |

525,988 |

132% |

1161.01 |

528,216 |

106% |

2221.15 |

527,191 |

105% |

| 9 |

134,217,728 |

4312.08 |

2501.81 |

2,491,091 |

129% |

11184.10 |

2,502,519 |

103% |

23961.32 |

2,510,228 |

103% |

50x Heuristic Weighting

HeuristicScale = 50.0bUseUnitCost = falsebUseNodeCompensation = false

| # Layers |

# Voxels |

Generation Time (ms)

|

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.41 |

1.73 |

918 |

142% |

2.19 |

912 |

109% |

2.60 |

913 |

109% |

| 5 |

32,768 |

10.41 |

6.37 |

7,273 |

149% |

11.53 |

7,296 |

108% |

18.22 |

7,294 |

107% |

| 6 |

262,144 |

56.18 |

26.10 |

33,040 |

155% |

59.41 |

32,857 |

110% |

107.89 |

32,855 |

110% |

| 7 |

2,097,152 |

264.17 |

96.50 |

117,471 |

146% |

257.28 |

117,047 |

109% |

496.25 |

117,040 |

109% |

| 8 |

16,777,216 |

1257.29 |

453.45 |

516,538 |

146% |

1568.65 |

515,013 |

108% |

3205.76 |

515,040 |

108% |

| 9 |

134,217,728 |

4346.97 |

2513.02 |

2,470,447 |

144% |

10895.58 |

2,463,656 |

106% |

23037.96 |

2,464,780 |

106% |

10x Heuristic Weighting and Unit Cost Nodes

HeuristicScale = 1.0bUseUnitCost = truebUseNodeCompensation = false

| # Layers |

# Voxels |

Generation Time (ms) |

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

8.27 |

1.56 |

910 |

151% |

1.98 |

910 |

112% |

2.41 |

910 |

111% |

| 5 |

32,768 |

18.26 |

4.64 |

7,230 |

149% |

9.86 |

7,231 |

109% |

17.14 |

7,231 |

107% |

| 6 |

262,144 |

51.81 |

18.01 |

32,666 |

163% |

51.85 |

32,598 |

110% |

102.05 |

32,598 |

110% |

| 7 |

2,097,152 |

253.45 |

66.90 |

116,275 |

167% |

229.97 |

116,007 |

110% |

467.92 |

116,012 |

111% |

| 8 |

16,777,216 |

1078.94 |

258.58 |

513,264 |

165% |

1131.15 |

511,406 |

108% |

3015.29 |

511,399 |

108% |

| 9 |

134,217,728 |

4288.11 |

1853.16 |

2,459,858 |

157% |

10238.10 |

2,449,716 |

105% |

23367.65 |

2,449,716 |

105% |

10x Heuristic Weighting and Node Size Compensation

HeuristicScale = 10.0bUseUnitCost = falsebUseNodeCompensation = true

| # Layers |

# Voxels |

Generation Time (ms) |

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

7.34 |

1.79 |

975 |

135% |

2.26 |

956 |

107% |

2.62 |

950 |

107% |

| 5 |

32,768 |

11.39 |

6.57 |

7,499 |

138% |

11.83 |

7,484 |

105% |

18.56 |

7,466 |

104% |

| 6 |

262,144 |

61.60 |

27.53 |

33,776 |

134% |

60.05 |

33,610 |

104% |

107.44 |

33,641 |

104% |

| 7 |

2,097,152 |

267.13 |

90.43 |

101,188 |

173% |

224.94 |

99,847 |

121% |

433.79 |

100,202 |

117% |

| 8 |

16,777,216 |

1098.10 |

278.71 |

355,838 |

187% |

721.82 |

361,459 |

130% |

1327.04 |

363,007 |

126% |

| 9 |

134,217,728 |

4279.99 |

1390.06 |

1,491,350 |

187% |

5642.72 |

1,501,437 |

130% |

11290.54 |

1,500,582 |

127% |

10x Heuristic Weighting, Unit Cost Nodes and Node Size Compensation

HeuristicScale = 10.0bUseUnitCost = truebUseNodeCompensation = true

| # Layers |

# Voxels |

Generation Time (ms) |

A* |

Lazy Theta* |

Theta* |

| Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

Time (ms) |

Iterations |

Distance |

| 4 |

4,096 |

8.06 |

1.83 |

910 |

151% |

2.06 |

910 |

112% |

2.44 |

910 |

111% |

| 5 |

32,768 |

10.34 |

4.74 |

7,228 |

153% |

9.94 |

7,229 |

107% |

17.54 |

7,229 |

106% |

| 6 |

262,144 |

52.26 |

18.81 |

32,670 |

166% |

50.90 |

32,603 |

110% |

96.54 |

32,604 |

110% |

| 7 |

2,097,152 |

257.24 |

65.39 |

103,680 |

177% |

181.03 |

103,781 |

121% |

352.56 |

103,781 |

121% |

| 8 |

16,777,216 |

1092.49 |

218.64 |

365,329 |

181% |

565.96 |

365,942 |

127% |

1054.23 |

365,940 |

127% |

| 9 |

134,217,728 |

4294.01 |

1344.35 |

1,425,086 |

181% |

3600.87 |

1,426,346 |

128% |

6796.15 |

1,426,343 |

128% |

Raycast Benchmarks

Remarks

The Physics Line Trace obviously doesn't depend on the octree while the raycasting does.

The octree raycast is used for the line-of-sight checks in the pathfinding algorithm.

I wasn't able to get the raycast time to increase as expected with the layer count, so everything past 5 layers is within the margin of error.

It's clear that the algorithm is very efficient, consistently being 3x faster than a physics line trace.

It should be noted however that both are very fast and that the Physics Line Trace is more flexible.

| # Layers |

# Voxels |

Generation Time (ms) |

Octree Raycast Time (μs) |

Physics Line Trace Time (μs) |

| 4 |

4,096 |

7.45 |

0.20 |

2.05 |

| 5 |

32,768 |

11.52 |

0.68 |

2.04 |

| 6 |

262,144 |

57.99 |

0.70 |

2.15 |

| 7 |

2,097,152 |

261.01 |

0.64 |

2.02 |

| 8 |

16,777,216 |

1099.9 |

0.57 |

1.63 |

| 9 |

134,217,728 |

4275.72 |

0.77 |

2.16 |